What is the most important discovery science ever made?

That truth exists.

Science, logic, emotion, even truth, are all local, whereas the universe, as far as we can see, extends without bounds, shrouded in more mystery than we can guess. Truth is local, but shines and makes us, locally, divine. Most of what there is has yet to be revealed [1].

But the most important remains: truth exists.

***

SCIENCE POINTS OUT EXISTENCE AND CREATES IT:

“Demonstrate” comes from what comes out (“de”) of showing (“monstrare”). Trying to temper my seemingly fanatical pro-science mood, as if one needed to be tempered in one’s thirst for knowledge, Sagal Tisqaad made an age-old objection:

“Science has only ever explained how things work. Not what they are.”

Right. Except that things are defined by how they look and how they work… Exactly as “science” does it. And “science” means (more or less) certain knowledge. No more, no less. Being against it is being against brains. But then there is more… Is an explanation itself an… object? Yes it is! Let me explain after the contemplation of the tree of life…

LOGOS IS EXISTENCE, EXISTENCE IS LOGOS:

Explaining how something works, and what it means, to work, is itself an existentialist statement. If I proffer Proposition P, there exists P.

Some may whine that this is just sophistry, a mind game. However, minds are real objects.

At least, so I claim. I view the brain as a dynamic (sub) quantic object [2]… made of patterns: neurons, axons, dendrites, neurohormonal topology, and the geometry and topology of structures we know nothing about yet, because they are too fine and dynamic for our present gross instruments of scientific inquiry to reveal. An example is the inner machinery of just one neuron, which is most certainly, in part, and among other things, a Quantum Computer, a sort of entity we barely know exists, and barely know how to make work a tiny bit… even with the help of tremendous machinery.

Ideas correspond to neural geometry-topological patterns, so to a proposition P is associated such a pattern, a pattern specific to P… which didn’t exist before, so came into being as the idea got formulated (one will generally utter the idea before really formulating it).

In other words, logic creates existence. As John had it in the Bible…

“In the beginning was the Logos, and the Logos was with God, and the Logos was God.” (John 1:1)

Ἐν ἀρχῇ ἦν ὁ λόγος, καὶ ὁ λόγος ἦν πρὸς τὸν θεόν, καὶ θεὸς ἦν ὁ λόγος (En arkhêi ên ho lógos, kaì ho lógos ên pròs tòn theón, kaì theòs ên ho lógos.) The concept is already in Luke 1:2 and the Book of Revelation [3]…

Identifying God and Logic was explicitly done in the 11th century by a prominent abbot, to the fury of the Catholic Church and its Pope. That was the beginning of the end of Christianism.

How did antique thinkers realize that the logos (discourse, logic) entails existence? Well, introspection: when one has an idea or song in one’s head it can feel all too real… And could, in the end, move mountains… So exist it does…

Patrice Ayme

***

P/S: If I put my hand on a red hot surface, what comes into being? Pain! Is pain a… logos… according to my conceptology above? Of course. Pain is logos, fresh or not. Pain is a pattern in the brain which comes into existence, with plenty of sense attached to it, and pain has to co-exist with the rest of pre-existing patterns in said brain…

***

[1] How can something exist locally and not globally? Casual observations show this is the case. Differential geometry is all about the interplay between local and global. That means there is a gigantic science of the interplay between local and global, which can be used… both globally and locally in various analogies, more or less valid or evocative…

***

[2] On first analysis, this does not depend upon my SQPR, underlying CIQ (Copenhagen Interpretation of Quantum). It could well be that ideas could be viewed as classical objects.

***

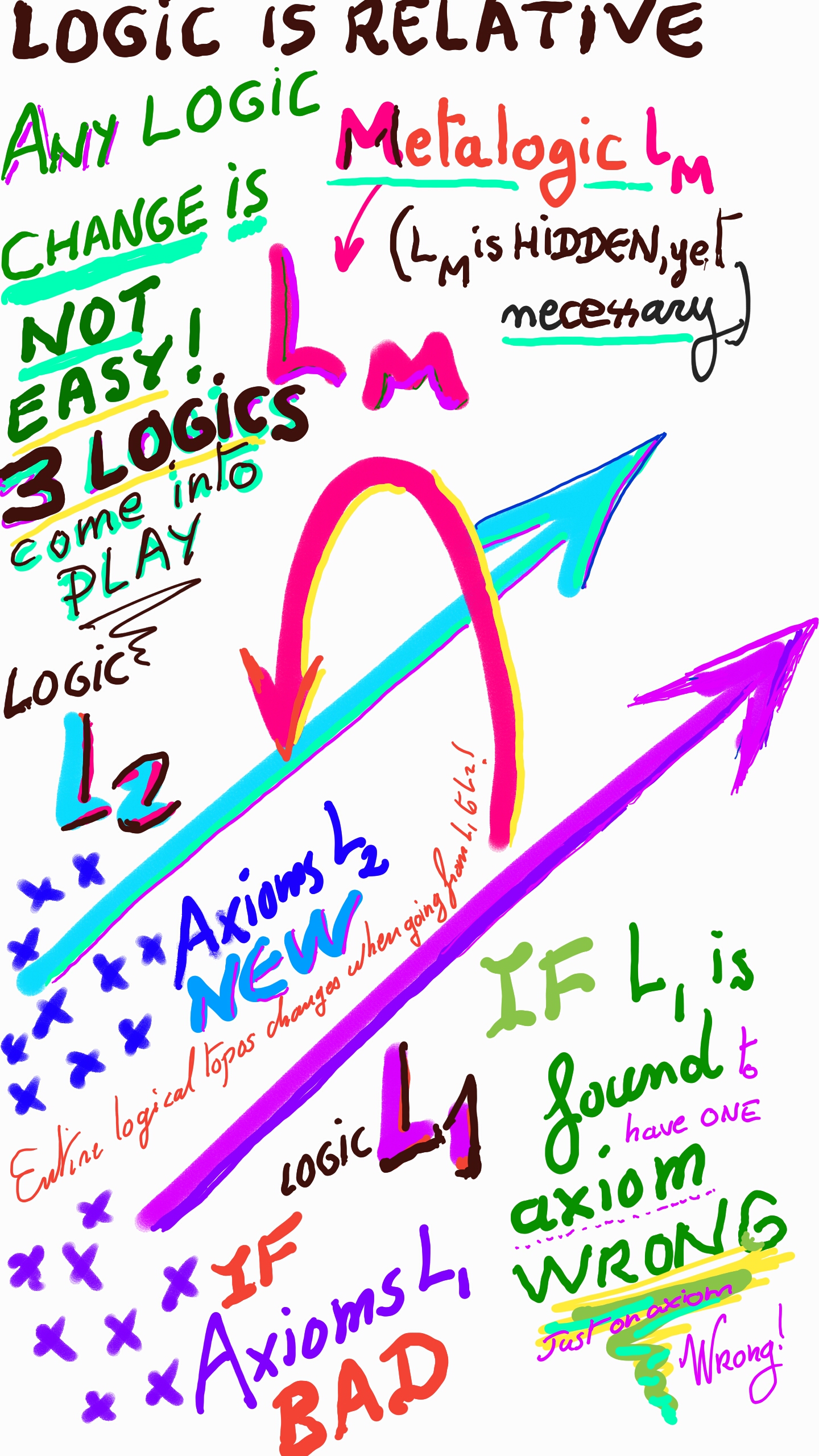

[3] λόγος, logos, is often mistranslated as “word” by churchmen, who, understandably enough, fear logic, the logos. In truth, logos is not just the “word”, but words with sense attached, as in Category Theory… logos, the discourse, the logic. Oh, what is logic? No logician has a wide enough concept of “logic”. It is an open problem in logic, what logic is. What I propose is that logic is any brain arrangement which makes sense (by making sense, I mean a sense like x → y… any chain of implications, as in Category Theory…).